- OPEN SOURCE FLEXLM HOW TO

- OPEN SOURCE FLEXLM INSTALL

- OPEN SOURCE FLEXLM VERIFICATION

- OPEN SOURCE FLEXLM LICENSE

- OPEN SOURCE FLEXLM DOWNLOAD

One daemon is called lmgrd the other is the Rational vendor daemon.

OPEN SOURCE FLEXLM LICENSE

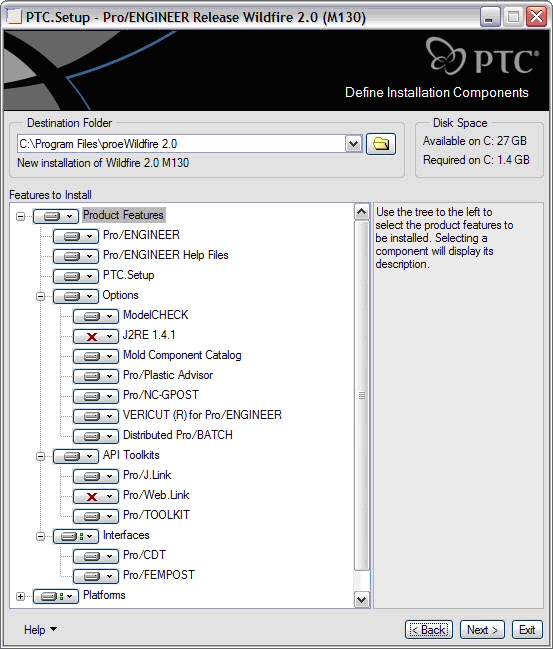

The Rational License Server versions 7.0 and lower consists of two daemons (or processes) to manage floating licenses. Refer to the various sections (tutorial/security/analytics. Fair Share is is configured at the queue level (so you can have one queue using FIFO and another one Fair Share) And more. Job that belong to the user with the highest score will start next. To simplify troubleshooting, all these errors are reported on the web interface Custom fair-share ¶Įach user is given a score which vary based on: After 30 minutes, and if the capacity is still not available, Scale-Out Computing on AWS will automatically reset the request and try to provision capacity in a different availability zone.
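The fair-share rule described above can be sketched in a few lines. This is only an illustration, not the scheduler's actual code: the `Job` class, the `next_job` function, and the score values are hypothetical, and the scores are taken as given inputs because the factors that produce them are not listed here.

```python
from dataclasses import dataclass


@dataclass
class Job:
    job_id: str
    owner: str
    submit_order: int  # lower value = submitted earlier


def next_job(queued, policy, user_scores=None):
    """Pick the next job to start from a non-empty list of queued jobs."""
    if policy == "fifo":
        # FIFO queue: the oldest job starts first.
        return min(queued, key=lambda job: job.submit_order)
    if policy == "fairshare":
        scores = user_scores or {}
        # Fair-share queue: the job whose owner has the highest score starts
        # first; ties fall back to submission order (an assumption).
        return min(
            queued,
            key=lambda job: (-scores.get(job.owner, 0.0), job.submit_order),
        )
    raise ValueError(f"unknown policy: {policy}")


queued = [Job("1", "alice", 1), Job("2", "bob", 2), Job("3", "alice", 3)]
scores = {"alice": 10.0, "bob": 42.0}  # made-up scores for illustration

print(next_job(queued, "fifo").job_id)               # prints 1
print(next_job(queued, "fairshare", scores).job_id)  # prints 2
```

Because the policy is passed per call, the same function can serve one queue in FIFO mode and another in fair-share mode, which mirrors the per-queue configuration described above.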

Scale-Out Computing on AWS performs various dry-run checks before provisioning capacity. However, AWS may not be able to fulfill every request (e.g. 5 instances are needed but only 3 can be provisioned because of a capacity shortage within a placement group). In this case, Scale-Out Computing on AWS tries to provision the capacity for 30 minutes.

Scale-Out Computing on AWS includes OpenSearch (formerly Elasticsearch) and automatically ingests job and host data in real time for accurate visualization of your cluster activity. Dashboard examples are included if you are not familiar with OpenSearch or Kibana.

Scale-Out Computing on AWS is built entirely on top of AWS and can be customized by users as needed. Most of the logic is based on CloudFormation templates, shell scripts, and Python code. More importantly, the entire Scale-Out Computing on AWS codebase is open source and available on GitHub.

Scale-Out Computing on AWS includes two unlimited EFS file systems (/apps and /data). Customers can also deploy high-speed SSD EBS disks or FSx for Lustre as scratch locations on their compute nodes. Refer to this page to learn more about the various storage options offered by Scale-Out Computing on AWS.

Centralized user management: customers can create unlimited LDAP users and groups. By default, Scale-Out Computing on AWS includes a default LDAP account provisioned during installation as well as a "Sudoers" LDAP group that manages sudo permissions on the cluster.

Scale-Out Computing on AWS automatically backs up your data with no additional effort required on your side.

Refer to this link for best practices to control your HPC cost on AWS and prevent overspend.

Scale-Out Computing on AWS includes a FlexLM-enabled script that calculates the number of licenses available for a given feature and only starts the job and provisions the capacity when enough licenses are available.
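The license check mentioned above can be sketched as follows. This is a minimal illustration, not the script shipped with Scale-Out Computing on AWS. It assumes the usage summary lines that FlexLM's `lmutil lmstat -a` normally prints ("Users of ...: (Total of N licenses issued; Total of M licenses in use)"); the feature names, the sample text, and the function names `available_licenses` and `can_start_job` are hypothetical.

```python
import re

# Matches the per-feature usage summary line of lmstat output.
USAGE_RE = re.compile(
    r"Users of (?P<feature>\S+):\s+\(Total of (?P<issued>\d+) licenses? issued;"
    r"\s+Total of (?P<used>\d+) licenses? in use\)"
)


def available_licenses(lmstat_output, feature):
    """Return issued minus in-use licenses for a feature, or 0 if not listed."""
    for match in USAGE_RE.finditer(lmstat_output):
        if match.group("feature") == feature:
            return int(match.group("issued")) - int(match.group("used"))
    return 0


def can_start_job(lmstat_output, feature, needed):
    """True when at least `needed` licenses of `feature` are free."""
    return available_licenses(lmstat_output, feature) >= needed


# Made-up sample; in practice the text would come from the license server.
SAMPLE = """\
Users of sim_solver:  (Total of 10 licenses issued;  Total of 7 licenses in use)
Users of sim_viewer:  (Total of 1 license issued;  Total of 0 licenses in use)
"""

print(available_licenses(SAMPLE, "sim_solver"))  # prints 3
print(can_start_job(SAMPLE, "sim_solver", 4))    # prints False
```

Treating an unlisted feature as having zero free licenses is a conservative choice: a job that needs it stays queued rather than starting and failing at checkout.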
You can easily review your HPC costs filtered by user, team, project, or queue using AWS Cost Explorer. Scale-Out Computing on AWS also supports AWS Budgets and lets you create budgets assigned to a user, team, project, or queue. To prevent overspend, Scale-Out Computing on AWS includes hooks that restrict job submission when a customer-defined budget has expired. Lastly, Scale-Out Computing on AWS lets you create queue ACLs or instance restrictions at the queue level.
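To make the submission controls above concrete, here is a rough sketch of the kind of check a job-submission hook could perform. It is not the hook used by Scale-Out Computing on AWS: the `QueuePolicy` fields, the `check_submission` function, and the rule that a budget counts as expired once spend reaches its limit are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional, Set, Tuple


@dataclass
class QueuePolicy:
    allowed_users: Set[str] = field(default_factory=set)         # empty = no ACL
    restricted_instances: Set[str] = field(default_factory=set)  # blocked types
    budget_limit: Optional[float] = None                          # None = no budget


def check_submission(policy: QueuePolicy, user: str, instance_type: str,
                     spend_to_date: float) -> Tuple[bool, str]:
    """Return (accepted, reason) for a job submitted to a queue."""
    if policy.allowed_users and user not in policy.allowed_users:
        return False, "user is not in the queue ACL"
    if instance_type in policy.restricted_instances:
        return False, "instance type is restricted on this queue"
    # Assumption: the budget is treated as expired once spend reaches the limit.
    if policy.budget_limit is not None and spend_to_date >= policy.budget_limit:
        return False, "budget has expired"
    return True, "ok"


policy = QueuePolicy(
    allowed_users={"alice"},
    restricted_instances={"p3.16xlarge"},
    budget_limit=1000.0,
)

print(check_submission(policy, "alice", "c5.large", 250.0))   # (True, 'ok')
print(check_submission(policy, "alice", "c5.large", 1000.0))  # (False, 'budget has expired')
```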

Users can submit, retrieve, and delete jobs remotely via an HTTP REST API.

OPEN SOURCE FLEXLM DOWNLOAD

OPEN SOURCE FLEXLM VERIFICATION

- Synopsys Physical Verification with ICV Tutorial Overview

- Submit your HPC job via a custom web interface

OPEN SOURCE FLEXLM HOW TO

- How to easily share data with your virtual desktops

- Import custom AMI to provision capacity faster

- Create Virtual Desktop Images for Windows/Linux

OPEN SOURCE FLEXLM INSTALL

- Install your Scale-Out Computing on AWS cluster

- Automatic emails when your job starts/stops

- (Legacy) Install your Scale-Out Computing on AWS cluster

- Prevent users from changing specific parameters

- Configure custom Security Groups or IAM role

- Restrict provisioning of specific instance types

- Restrict number of concurrent jobs and/or instances

- Enable OAuth2 authentication with Cognito
